In the previous blog post, I discussed autocomplete with wild cards. That implementation was based on simple prefix tries, where each character in each word maintained a separate node. Insertion, removal, and traversal of the simplistic prefix trie is easy; We simply traversed along the path per character in the word being looked up. If we fell off the path, we knew that the word wasn’t in the dictionary; if we didn’t, we could determine whether the word was in the dictionary by checking the whether terminal = true, and further traverse to generate a set of all suffixes with that prefix.

In this blog post, I take that implementation one step further and implement insert, remove and traverse for a compressed prefix trie. What are the advantages of a compressed prefix trie? Essentially, the implementation is superior in every way except complexity compared with a simple prefix trie.

See the implementation here (and feel free to play with it): Search Part VIII: Compressed/Optimized Prefix Tries

Advantages of Compression

As stated above, the advantages of compression are numerous. Here are a few below:

- Significantly reduce memory footprint. In my personal tests, I end up using around 66% less memory

- Faster traversal and results generation. Since compressed tries can simply traverse along the value kept in each node vs having to traverse down the trie, lookup is significantly faster as well (up to 66% faster)

- Faster construction of the trie. The same advantages above apply.

What is a Compressed Trie?

A compressed trie builds upon a normal trie by eliminating redundant edges in the trie. In my implementation, I compress down each branch (representing each suffix). I could go even one step farther and use pointers to existing substrings in the trie. Using this approach, I’ve heard of compressibility/memory savings using only 16% compared with a full-blown trie.

The best way to explain how a compressed trie works is to compare the non compressed with the compressed versions (* represents terminal nodes):

2.1.7 :007 > a.prefix_trie.print

a

p

p

l

e*

b

a

n

a

n

a*

s*

m

a

n

g

o*

e

s*

s

t

e

i

n*

g

r

a

p

e*

p

i

n

e

a

p

p

l

e*

Total size: 39 nodes

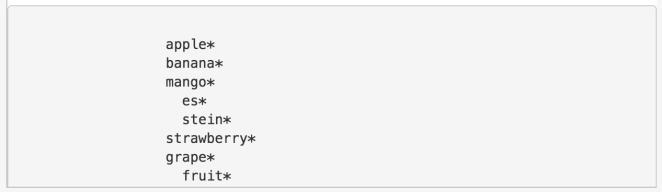

Compare this with a compressed trie:

apple*

banana*

s*

mango*

es*

stein*

grape*

pineapple*

=> nil

Total size: 9 nodes

Thus, right off the bat, you can see that the size has shrunk by 30 nodes, or ~ 75%. Of course, real memory savings is a little more vague, since each flattened node is now larger by holding a larger value.

What changed from the uncompressed to the compressed trie? Essentially, you can see that each branch of the trie that only had one child could be compressed into the same node. For example, pineapple is the only word that starts with p in the dictionary, thus each edge could be compressed down to one word.

In practice, a flattening algorithm would look like this:

var node = this;

while (node.num_children() === 1 && !node.terminal) {

//set children to child

var child = node.children[Object.keys(node.children)[0]];

var grandchildren = child.children;

node.children = grandchildren;

node.c += child.c;

node.terminal = child.terminal;

_.each(grandchildren, function(gc) {

gc.parent = node;

});

if (child.terminal) { break; }

}

This algorithm simply traverses up the chain, squashing any redundant edges along the way. Next, if we apply this across all leaf nodes, we can optimize the entire trie.

this.optimize = function() {

var self = this;

var queue = [];

Object.keys(self.children).forEach(function(k) {

queue.push(self.children[k]);

});

while (queue.length > 0) {

var node = queue[0];

node.flatten();

Object.keys(node.children).forEach(function(k) {

queue.push(node.children[k]);

});

queue = queue.splice(1);

};

};

The next step is to rewrite the traversal algorithm, because we can’t naively use each character in the word we are trying to look up to traverse each node. We need a way to tell if the current node we are at is a flattened node or not. If so, traverse into the word as far as possible and return where we all off, or if we’re done traversing, return the node. In practice, it’s a little more complex than that, and there are lots of tricky edge cases in the implementation. However, I’ve done the hard work for you:

/* traverses tree based on text */

this.traverse = function(textIn) {

var node = this;

var path = '';

var j = 1;

var dist = j;

var chars = textIn.split('').map(function(c) { return c.toLowerCase(); })

/* traversal of compressed trie */

for (var i = 0; i < chars.length; i++) {

c = chars[i];

if (node.flattened()) { // flattened

if (node.c[j] === c) {

path += c;

j++;

dist = j;

continue;

} else if (j < node.c.length) { //terminated early

return [path, undefined, node, j];

}

j = 1;

}

if (node.children[c]) {

path += c;

node = node.children[c];

//already added path above

} else {

return [path, undefined, node, dist];

}

}

var prefix = path;

if (node.flattened()) {

prefix = path.substr(0, path.length - j);

}

return [prefix, node, node, dist];

};

Essentially, this traversal algorithm does the following. It navigates through the trie for each character in the word being looked up. We go as far as possible until we have to fall off the trie, or have exhausted the characters in the word. After we finish this process, we return a 4-tuple (an array here) below:

# 1) the path taken thus far

# 2) the node traversed into

# 3) if no node traversed into, the last node seen

# 4) the distance into the last node seen

With this information, we have everything we need to either remove the node, insert new nodes from this point, determine whether the word exists in the trie, or continue traversing to complete the suffix set.

Online vs Offline Construction

By following this approach, we’ve derived the compressed trie data structure. However, we arrived at an offline algorithm for actual construction; that is, we need the entire corpus of words in the dictionary to construct the trie. Then, once we have constructed the entire trie, we need to traverse the entire trie again and flatten every branch, essentially doubling the amount of effort for trie construction. On very large datasets, this can be costly. This is known as an offline algorithm, or one that requires the whole dataset in order to construct the output. Contrast this to an online algorithm, which can produce the desired output incrementally. The implementation of the online algorithm for compressed prefix trie construction is very tricky. There are lots of edge cases, maintaining state so we know how far into the trie we’ve gone, and taking slices of prefixes so we can continue splitting nodes and swapping children. I’ll just describe it here, but you can see the implementation for the gory details.

Online Prefix Trie Construction Algorithm

- traverse as deep as we can go, using the above traverse method

- if we fall off the trie (i.e. traverse to a dead end)

- if we’re at a flattened node

- traverse as far into the node as possible, then create a new child node with the remainder of the word

- create a new child node with the remainder of the value that was in the node and point all existing children to that node

- if not

- insert a child node with the remainder of the word

- if we’re at a flattened node

- if we don’t fall off the trie

- if we reach and leaf node and have consumed all characters, simply set the node to terminal

- otherwise, determine how far we’ve gone in the current node

- create a child node with the remainder of the value of the node and point all existing children to that node

- create a child node with the remainder of the word

Benchmarking

Ok so now for some real world results (Based on a ruby implementation, hopefully to be open-sourced soon):

BEFORE:

Dictionary size: 86036. Running through creation 5 times.

user system total real

creation: 10.880000 0.290000 11.170000 ( 11.173094)

>avg: 2.176000 0.058000 2.234000 ( 2.234619)

Dictionary size: 86036. Running through prefix traversal 1000 times.

user system total real

prefix: 3.010000 0.010000 3.020000 ( 3.009824)

0.003010 0.000010 0.003020 ( 0.003010)

Dictionary size: 86036. Running through suffix traversal 10000 times.

user system total real

suffix: 12.020000 0.030000 12.050000 ( 12.050301)

0.001202 0.000003 0.001205 ( 0.001205)

Dictionary size: 86036. Running through brute force traversal 1000 times.

user system total real

brute force: 17.540000 0.230000 17.770000 ( 17.774003)

0.017540 0.000230 0.017770 ( 0.017774)

AFTER:

Dictionary size: 86036. Running through creation 5 times.

user system total real

creation: 4.760000 0.080000 4.840000 ( 4.879213)

>avg: 0.952000 0.016000 0.968000 ( 0.975843)

Dictionary size: 86036. Running through prefix traversal 1000 times.

user system total real

prefix: 2.130000 0.010000 2.140000 ( 2.149070)

0.002130 0.000010 0.002140 ( 0.002149)

Dictionary size: 86036. Running through suffix traversal 10000 times.

user system total real

suffix: 7.660000 0.020000 7.680000 ( 7.706711)

0.000766 0.000002 0.000768 ( 0.000771)

You can see that compressed trie construction is > 50% faster, prefix lookups are 33% faster, suffix lookups are ~40% faster. This is without spending much time optimizing either.

Finally, look at the memory differences:

# using the dictionary.json files # Uncompressed 2.1.7 :005 > a.prefix_trie.size + a.suffix_trie.size => 575978 (# nodes) # Compressed 2.1.7 :007 > a.prefix_trie.size + a.suffix_trie.size => 229498 (# nodes) Double this for maintaining the suffix trie as well for suffix matches.

Thus, we can see that our trie is about 60% smaller than the uncompressed trie. Awesome.

The trie in all its splendor:

I also had some fun and implemented a simple print function that lets you see what the finished trie looks like. I’ve included a sampling here:

* - terminal node

i*

n*

d*

o

c

i

l

ity*

e*

b

ility*

le*

trinat

ion*

e*

xyl*

ic*

-*

e

nglish*

uropean*

do-chinese languages*

briton*

aryan*

chinese*

l*

e

s*

n

t*

ly*

cy*

in*

rs

e*

ment*

e*

d*

a

tion*

ble*

w*

ment*

m

able*

it

e*

able*

ptable*

nesian*

aniline*

in*

or*

s*

gen*

ide*

phenol*

... and so on