In the previous search engine series, I demonstrated how to implement a naive fuzzy match using Levenshtein distance. I call it naive because the distance metric is based on matching the user’s query to matching words in the dictionary. In today’s blog post, I show how to extend that functionality across multiple words, taking into account spatial locality (an overloaded term) regardless of where they occur in the original document. I also show you how to add contextual highlighting of the user query of the search results.

Foreword: I’ve also done some refactoring to clean up the code a bit. I had begun writing the text search example using custom methods, but decided for readability sake to extend Array.prototype for some useful functionality. Another library out there that does pretty much the same array transformations I need is lodash, but I opted not to include it (no shortcuts!). However, where certain functions are implemented in standard javascript (ES5), I’ve used those (e.g. Array.prototype.filter). I think the code reads more cleanly now.

Multi-word fuzzy matches

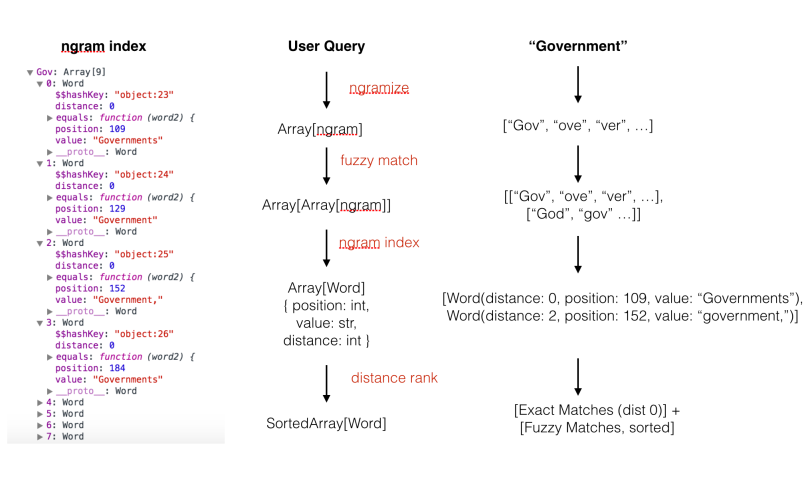

There’s no real secret to multi-word matches. For those keeping up with this series, if you look at the code, you’ll notice that it all builds on the last iteration. Multi-word search is no different. Previously, I keyed the ngram index using a list of ngrams formulated from the user’s query. Our search method followed this pattern:

user query > array of user query ngrams > array of fuzzy query ngrams > array potential matches > sorted array of word matches

See this image below (which I spent way too long creating):

You can see from this diagram that when the user entered in multiple words, we would never get good matches unless the words were adjacent. The reason is simple: Two different words will never have intersecting potential matches. While they could have potential fuzzy matches, this result is erroneous signal.

The easy and obvious fix for this is to split the user’s query by word, then conduct a fuzzy search through the index using all ngrams associated with those words. Much like we did for fuzzy searches with fuzzy ngrams, we move up one level of abstraction to N searches. This returns an array of array of Words, so we’ll need to flatten the array and then arrange them somehow.

Here is the sample implementation for this:

Note: as described above, I rewrote some of the methods to make this easier to read (less nested parentheses)

function getRankedWords(word) {

var ngrams = Object.keys(ngramize({}, word, 0));

var fuzzyNGrams = ngrams.map(keyOnFuzzy)

.flatten()

.unique()

.filter(function(ngram) {

return ngrams.indexOf(ngram) === -1;

}); //only take unique fuzzy ngrams

var exact = ngrams.map(keyOnHash);

var fuzzy = fuzzyNGrams.map(keyOnHash);

return rank(exact, fuzzy, word) || [];

};

if (!textIn || textIn.length < 3) return [];

return textIn.split(' ').map(getRankedWords).flatten();

Ranking result sets

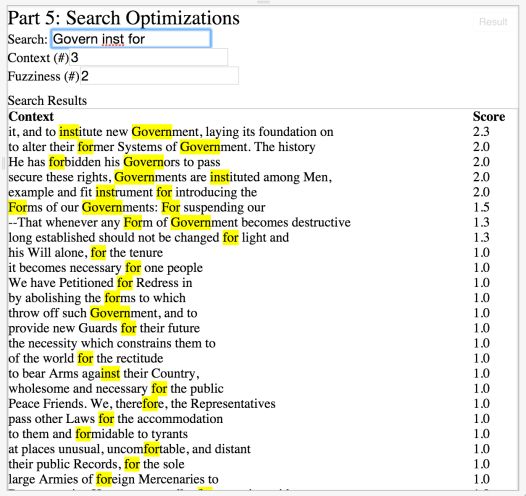

Since each search returns a set of exact and fuzzy matches, this implementation necessitates (as it did for fuzzy matches) another ranking scheme to arrange the results in order of relevance to the user. In my implementation, I opted for a quick and dirty solution of ranking via spatial locality (I know this is an overloaded term related to caching schemes). Essentially, based on the number of words of context, if any two results fall within the range, they form the same “result.” Note that since we are no longer matching 1 word at a time, I created a new class called a Result, which contains the string matching the search result’s context, gave it a new score (just an inverse distance calculation) and also added highlighting to the context.

// Result: has a context and score

var Result = function(context, score, highlighted) {

this.context = context;

this.score = score;

this.highlighted = highlighted;

};

With this, we define the interface for a search result, and are abstracted away from words. You can see how using abstractions like this can be very powerful. Instead of words, we may create indices that point to documents. Then for each document, we can reference the correct index. In this manner, we can have distributed indices across a cluster and get faster lookups than storing all indices in memory on one machine.

Here’s a sample implementation:

// Rank sets of results

// Algorithm

// Traverse through flattened results, if the D (delta)

// between adjacent positions i < $context, combine results

// and aggregate scores (i.e. more relevant)

var rankSets = function(sets) {

function score(distance) { return distance === 0 ? 1 : 1/(distance + 1); };

var sorted = sets.sort(Word.prototype.sortByPosition);

if (!sorted || sorted.length === 0) { return []; }

var prev = sorted[0];

var results = [];

for (var i = 1; i < sorted.length; i++) {

var curr = sorted[i];

var _score = score(prev.distance);

var lowPosition = prev.position;

var highPosition = lowPosition;

while (curr.position - prev.position <= $scope.context) { //spatially close

i++;

highPosition = curr.position;

_score += score(curr.distance);

prev = curr;

curr = sorted[i];

}

var _context = $scope.dict.slice(lowPosition - $scope.context,

highPosition + $scope.context).join(' ');

results.push(new Result(_context, _score, highlight($scope.textIn, _context)));

prev = curr;

}

return results.sort(Result.prototype.sortByScore);

};

You can see that the ranking algorithm is simple. I start by sorting the array of Words by position in the document. Recall that position in a Word is a pointer to its location in the document. Since we already computed a distance metric per Word compared with the corresponding word in the user’s query, we can reuse that computation to form a “score.”

The score method itself is also very simple. It’s just an inverse distance metric, such that “exact matches” return a score of 1, whereas imprecise matches return a score of 1/(distance +1).

Finally, as I traverse through the array of Words, when I find two words where the distance of the positions (in words) is less than the context, I increment the high and low position that was used to compute the context within the document. Then I aggregate the scores of the adjacent Words. If a Word has no neighbors within that range, the most recent positions are used to compute the Result. In the case where the Word is isolated, this just returns the same context as before. Where a Word had neighbors within range, this returns a context with a max range of (context * 2 + (highPosition – lowPosition)) words. We could truncate this to make all contexts the same size (but I opted not to).

Finally, since we’ve been iterating through our results and combining Words to make new contexts, we have to sort the set by score.

Highlight all the things

Highlighting is also a pretty easy add. When I instantiate a Result and push it into our results array, I have all the information I need to compute whether the result should contain highlighting or not. Simply by breaking apart the users query and using a string replace, we can return a html fragment ready to be rendered by the browser.

Sample implementation:

var highlight = function(textIn, context) {

return context.replace(

new RegExp("(" + textIn.split(' ').join('|') + ")", "gi"),

"<mark>$1</mark>");

};

That’s pretty much all it requires with this implementation.

Further optimizations:

- As this series is only meant to demonstrate how to implement some basic textual search techniques, it still doesn’t optimize for performance. We are still using extra space with our ngram index (storing each word) and are using O(n^2) to intersect the arrays of matches. It would be better to keep our indices sorted so we can have faster intersection time.

- Distributed indices would be a good idea. I briefly mentioned this above, but we have all the tools necessary such that we can also create indices of ngrams to documents instead of words. We can then use these ngram indices to filter out all documents that don’t match a user’s query, then use a pointer to another index to do the textual search within the document.

- Better ranking metrics. Additionally, my ranking algorithm is very basic and is based purely on distance and spatial locality. We could perhaps do something more useful, like score a Result differently depending on how many words apart each Word was. Also, because we use reuse the context variable, our maximum lookup range is small and likely wouldn’t even cover a sentence.

Here’s the full implementation so you can play around with it: Search Engine Series V – Multi-word matches and highlighting